Projects

High-Resolution Hyperspectral Ground Mapping for Robotic Vision (2018)

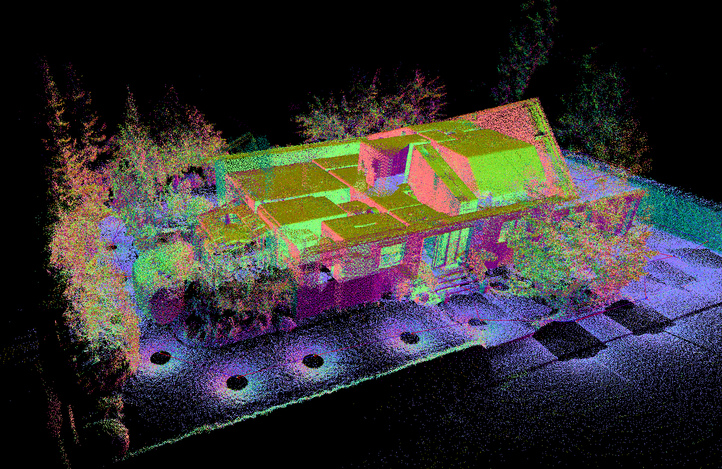

Abstract: Recently released hyperspectral cameras use large, mosaiced filter patterns to capture different ranges of the light’s spectrum in each of the camera’s pixels. Spectral information is sparse, as it is not fully available in each location. We propose an online method that avoids explicit demosaicing of camera images by fusing raw, unprocessed, hyperspectral camera frames inside an ego-centric ground surface map. It is represented as a multilayer heightmap data structure, whose geometry is estimated by combining a visual odometry system with either dense 3D reconstruction or 3D laser data. We use a publicly available dataset to show that our approach is capable of constructing an accurate hyperspectral representation of the surface surrounding the vehicle. We show that in many cases our approach increases spatial resolution over a demosaicing approach, while providing the same amount of spectral information.

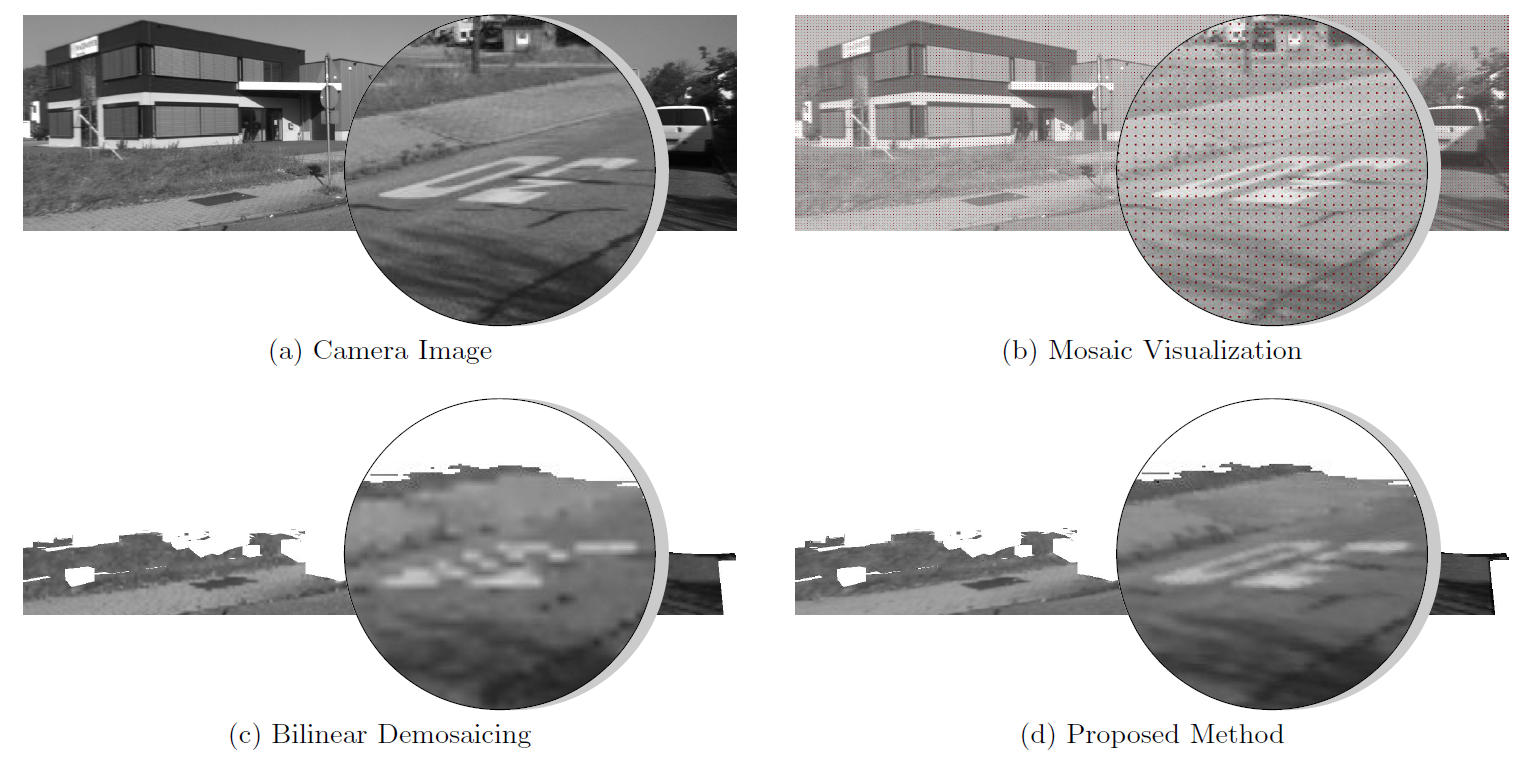

The following image shows a raw image from a hyperspectral camera (Ximea MQ022HG-IM-SM4X4-VIS). Note the strong, tiled 4 × 4 filter pattern that is visible throughout the image (magnified on the left).

Our approach fuses the individual spectral layers within the textured heightmap of the environment over time. We do this by running a visual odometry algorithm and then projecting the images onto a heightmap. The following image illustrates the achieved quality by backprojecting the reconstructed heightmaps into a camera image. A single spectral channel (pixels under the red pixels in (b)) is taken from the input camera image (a). The quality of the backprojection of the ground heightmaps is shown for both bilinear demosaicing (c) and our method (d). Frame #222 of KITTI odometry dataset #16 is used here.
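The fusion step can be illustrated with a minimal sketch. It is not the implementation from the paper: it assumes a row-major band layout inside each 4 × 4 tile, a single-layer grid instead of a multilayer heightmap, and a precomputed pixel-to-cell projection (`cell_rows`, `cell_cols`), which in the real system would come from visual odometry and the estimated geometry. All function and parameter names are hypothetical.

```python
import numpy as np

def band_index(rows, cols, pattern=4):
    """Band of each pixel in a tiled pattern x pattern mosaic (row-major layout assumed)."""
    return (rows % pattern) * pattern + (cols % pattern)

def fuse_frame(sum_map, count_map, raw, cell_rows, cell_cols, pattern=4):
    """Accumulate raw mosaic pixels into the per-band layers of a grid map.

    sum_map, count_map: arrays of shape (bands, H, W), updated in place.
    raw: 2D raw camera frame (no demosaicing applied).
    cell_rows, cell_cols: integer arrays shaped like raw, giving the map cell
        each pixel projects to; out-of-range values mark pixels that miss the map.
    """
    rows, cols = np.indices(raw.shape)
    bands = band_index(rows, cols, pattern)
    valid = ((cell_rows >= 0) & (cell_rows < sum_map.shape[1]) &
             (cell_cols >= 0) & (cell_cols < sum_map.shape[2]))
    idx = (bands[valid], cell_rows[valid], cell_cols[valid])
    # np.add.at handles several pixels landing in the same cell and band.
    np.add.at(sum_map, idx, raw[valid])
    np.add.at(count_map, idx, 1)

def mean_map(sum_map, count_map):
    """Per-band mean intensity; NaN where a band was never observed."""
    out = np.full(sum_map.shape, np.nan)
    np.divide(sum_map, count_map, out=out, where=count_map > 0)
    return out
```

Each raw pixel contributes only to the layer of its own band, so a map cell fills in its spectrum gradually as the vehicle moves and different filter elements observe it.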

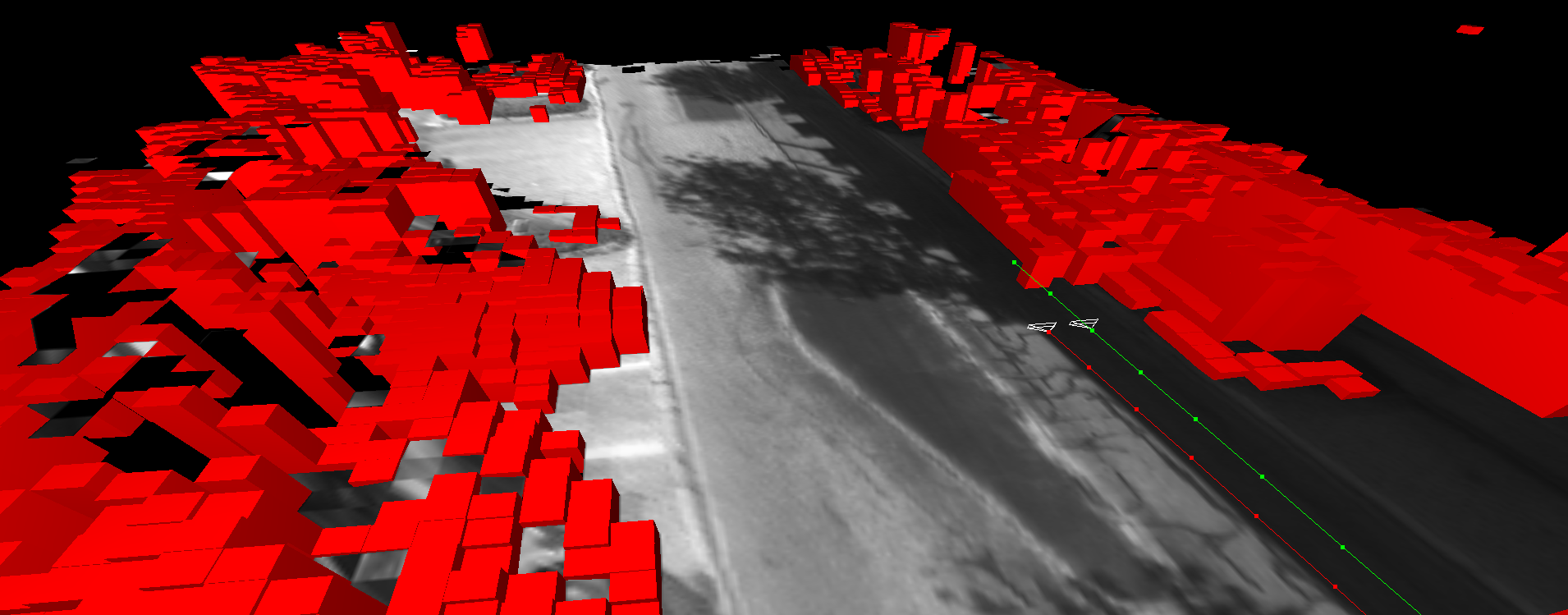

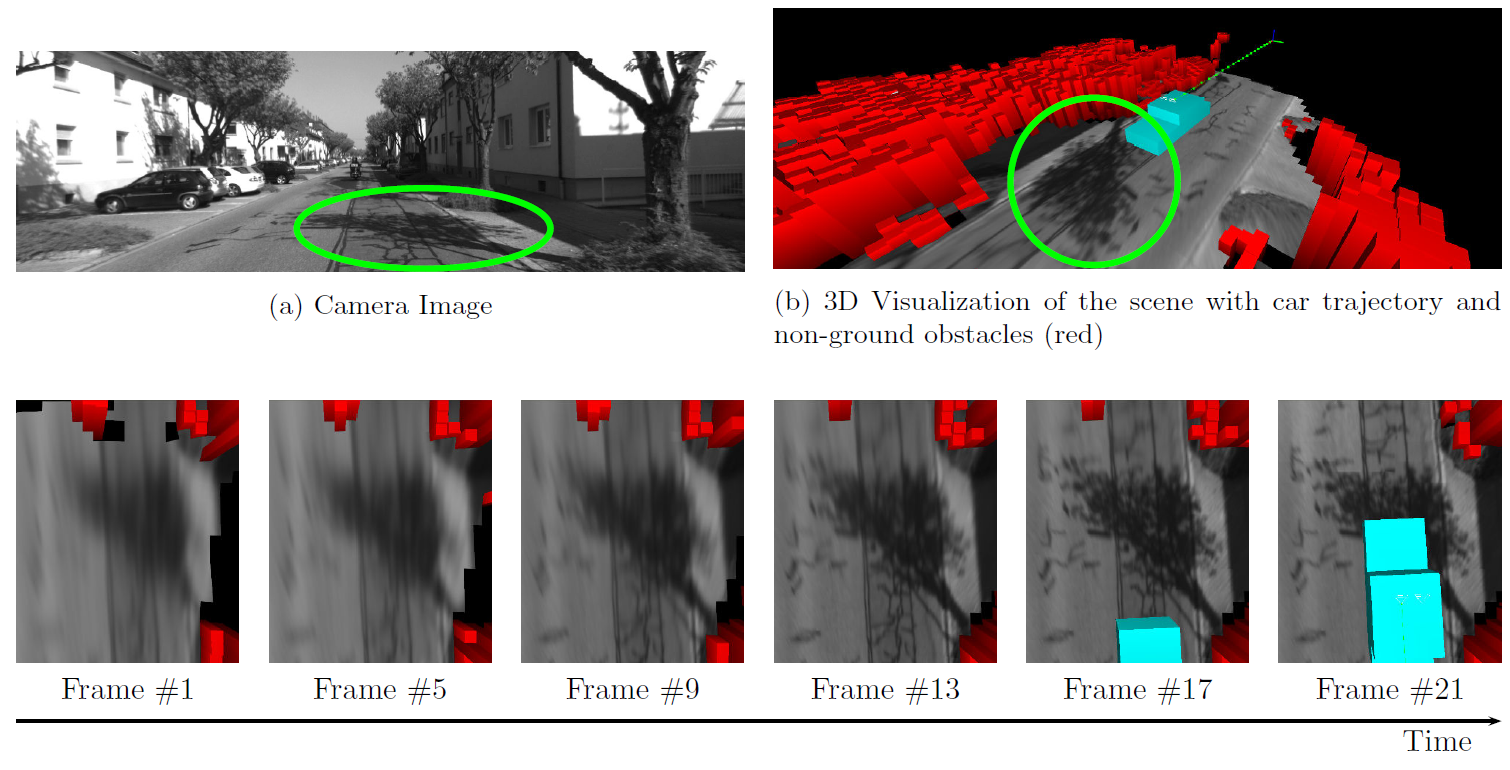

Details in the spectral texture increase over time: as more camera frames are integrated, the spatial resolution of the ground surface maps increases. This experiment was performed using the KITTI odometry dataset #1.

Multi DOF Kinematic Calibration (2016)

This software accurately calibrates geometric/kinematic transformation hierarchies from sensor data. It can calibrate or estimate

- unknown robot locations,

- unknown relative poses within the transformation hierarchy (for example the relative pose between two cameras),

- and unknown 1-DOF hinge joints, including the rotational axes as well as a mapping from raw encoder values to angles.

Prior to optimization, hierarchies have to be modeled in a simple JSON file format, which is passed to the optimizer. The optimizer then solves the problem by minimizing reprojection errors with respect to known configurations of markers, or metric point-to-plane errors between laser points and (planar) marker surfaces.
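The two ingredients named above, a 1-DOF hinge joint and a point-to-plane error, can be sketched as follows. This is an illustrative sketch and not the toolkit's code: the function names are hypothetical, and the linear encoder-to-angle mapping (`scale`, `offset`) is an assumption, since the form of the mapping is not specified here.

```python
import numpy as np

def hinge_rotation(axis, angle):
    """Rotation matrix for a rotation by `angle` about `axis` (Rodrigues' formula)."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def encoder_to_angle(ticks, scale, offset):
    """Assumed linear mapping from raw encoder values to joint angles (radians)."""
    return scale * ticks + offset

def point_to_plane_residuals(points, plane_point, plane_normal):
    """Signed distances of Nx3 laser points to a planar marker surface."""
    normal = np.asarray(plane_normal, dtype=float)
    normal = normal / np.linalg.norm(normal)
    return (np.asarray(points, dtype=float) - plane_point) @ normal
```

In a calibration, an optimizer would adjust the joint axis, `scale`, `offset`, and the unknown poses until residuals of this kind become small over all measurements.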

Calibrating either cameras or laser range finders requires known 3D reference geometry. We establish this reference using our visual_marker_mapping toolkit.

Our full code, documentation and examples are available on [GitHub](https://github.com/cfneuhaus/multi_dof_kinematic_calibration).

Visual Marker Mapping (2016)

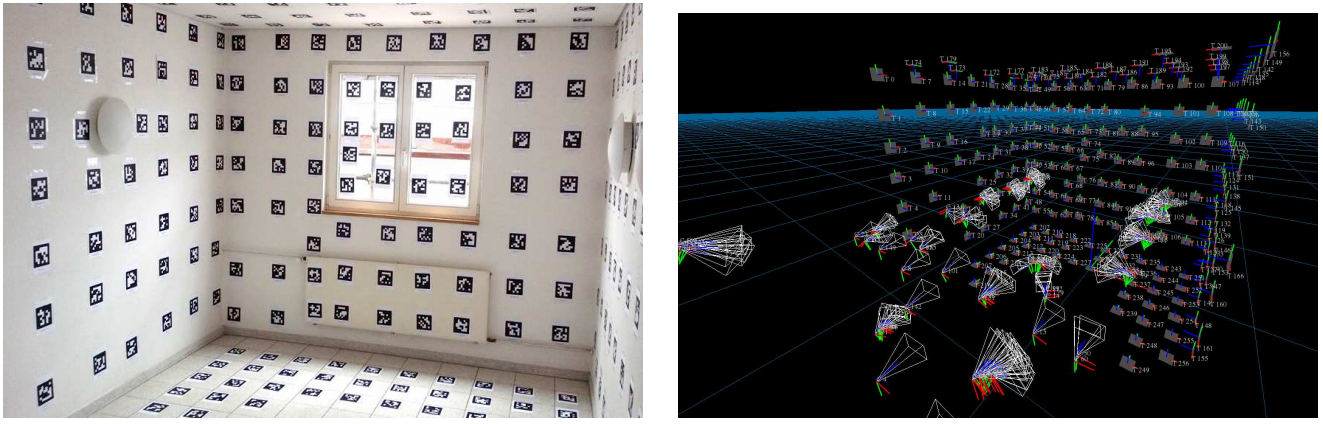

Together with my students Stephan Manthe and Lukas Debald, I developed a toolkit for the accurate 3D reconstruction of configurations of markers from camera images. It uses AprilTags by Olson, which can simply be printed out and attached to walls or objects. The reconstructed markers can then be used by subsequent algorithms that need 3D reference geometry, for example.

Our full code, documentation and examples are available on [GitHub](https://github.com/cfneuhaus/visual_marker_mapping).

DLR Spacebot Cup (2015)

In 2015, our team participated in the DLR Spacebot Cup/Camp, a competition organized by the German Aerospace Center (DLR).

The following video is the presentation of our team, produced by the DLR.

The next video shows highlights of our participation in the actual competition:

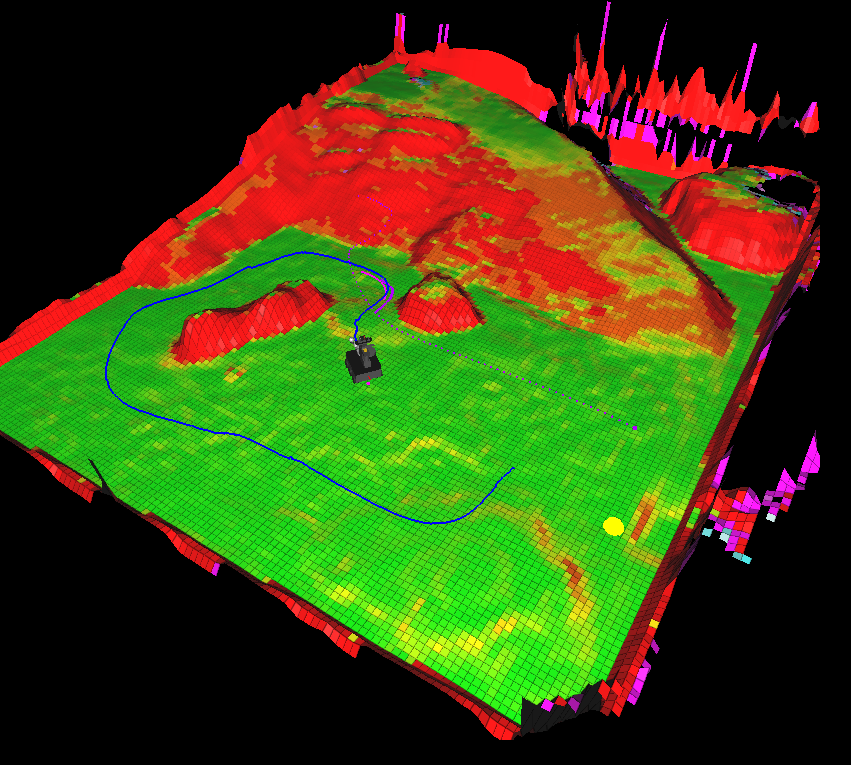

The following figure shows the map built by our robot during the competition.

Arena

During development, we also mapped our test arena. The results can be seen in this video:

Diploma Thesis (2011)

My diploma thesis titled “A Full 2D/3D GraphSLAM System for Globally Consistent Mapping based on Manifolds” can be downloaded here:

Abstract: Robotics research today is primarily about enabling autonomous, mobile robots to seamlessly interact with arbitrary, previously unknown environments. One of the most basic problems to be solved in this context is the question of where the robot is, and what the world around it, and in previously visited places, looks like – the so-called simultaneous localization and mapping (SLAM) problem. We present a GraphSLAM system, which is a graph-based approach to this problem. This system consists of a frontend and a backend: The frontend’s task is to incrementally construct a graph from the sensor data that models the spatial relationship between measurements. These measurements may be contradictory and therefore the graph is inconsistent in general. The backend is responsible for optimizing this graph, i.e., finding a configuration of the nodes that is least contradictory. The nodes represent poses, which do not form a regular vector space due to the contained rotations. We respect this fact by treating them as what they really are mathematically: manifolds. This leads to a very efficient and elegant optimization algorithm.
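To illustrate what treating poses as manifolds means in practice, here is a minimal sketch for 2D poses (x, y, θ). It is an assumption-laden illustration and not the thesis code: the operator names `boxplus` and `boxminus` and the simple composition-based update are choices made for this example. The key point is that increments live in a local vector space, while the pose itself is updated by composition and the angle is always wrapped.

```python
import math

def wrap_angle(angle):
    """Map an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def boxplus(pose, delta):
    """Apply a local increment (dx, dy, dtheta), expressed in the pose's own frame."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * dx - s * dy, y + s * dx + c * dy, wrap_angle(theta + dtheta))

def boxminus(pose_b, pose_a):
    """Local increment d such that boxplus(pose_a, d) reproduces pose_b."""
    dx_w, dy_w = pose_b[0] - pose_a[0], pose_b[1] - pose_a[1]
    c, s = math.cos(pose_a[2]), math.sin(pose_a[2])
    return (c * dx_w + s * dy_w, -s * dx_w + c * dy_w,
            wrap_angle(pose_b[2] - pose_a[2]))
```

An optimizer built on such operators computes its update step as an ordinary vector and applies it with `boxplus`, so headings near ±π never produce spurious errors of almost 2π.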